With the release of Google Play Services 7.8, Google added the Mobile Vision APIs. They provide a framework for finding and tracking faces in the camera feed of a custom Android app. The framework includes face detection, which locates and describes visual objects in images and videos. Here, we’ll see how to integrate this face detection API into an app with an Android face detection example.

Android Face Detection

Face detection is not a brand-new feature on Android. Before the Mobile Vision API, it was possible to detect faces with the android.media.FaceDetector API and its FaceDetector.Face result class, which have been available since Android API level 1. The face detection framework in Mobile Vision is an improvement on that API.

In this tutorial, we’ll see how to get started with Android face detection.

Getting Started

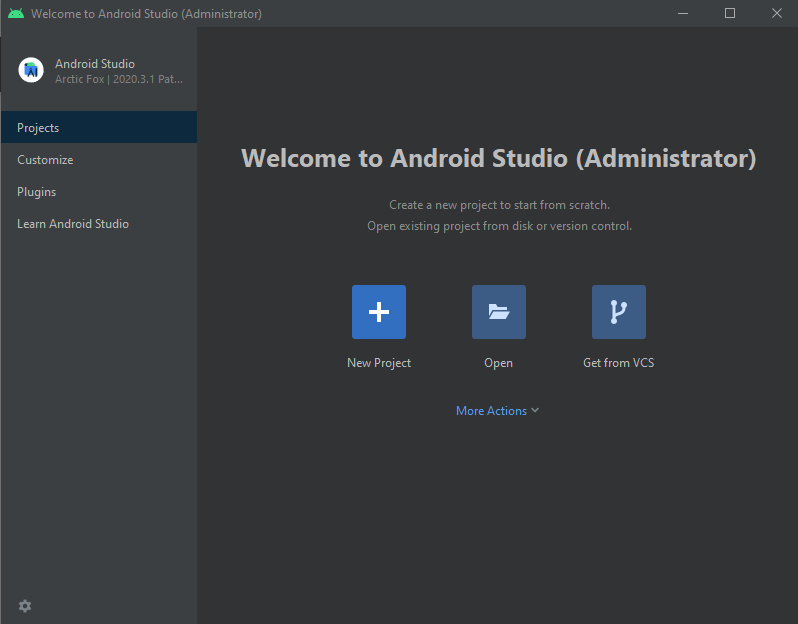

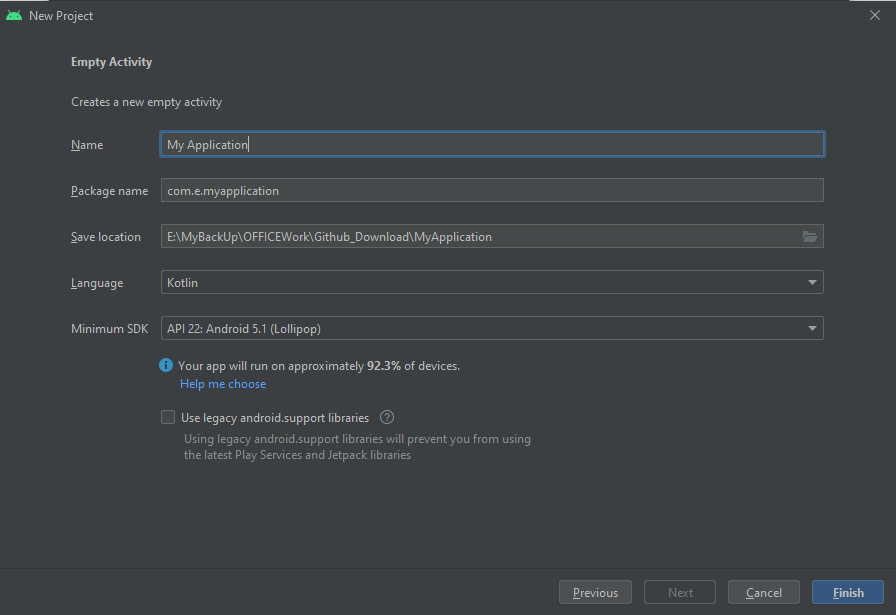

Create a new project in Android Studio.

Select your target Android devices.

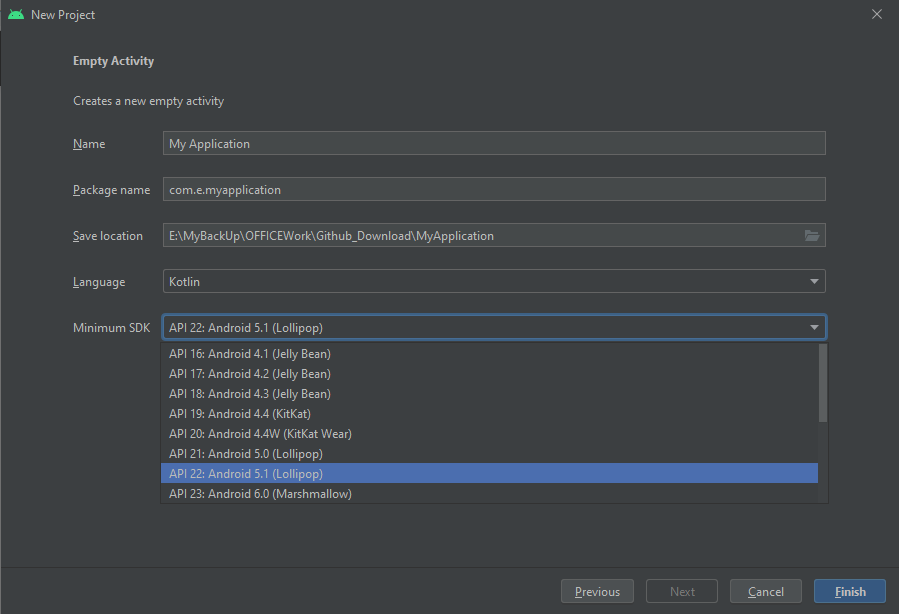

Add an Activity to Mobile -> select Empty Activity.

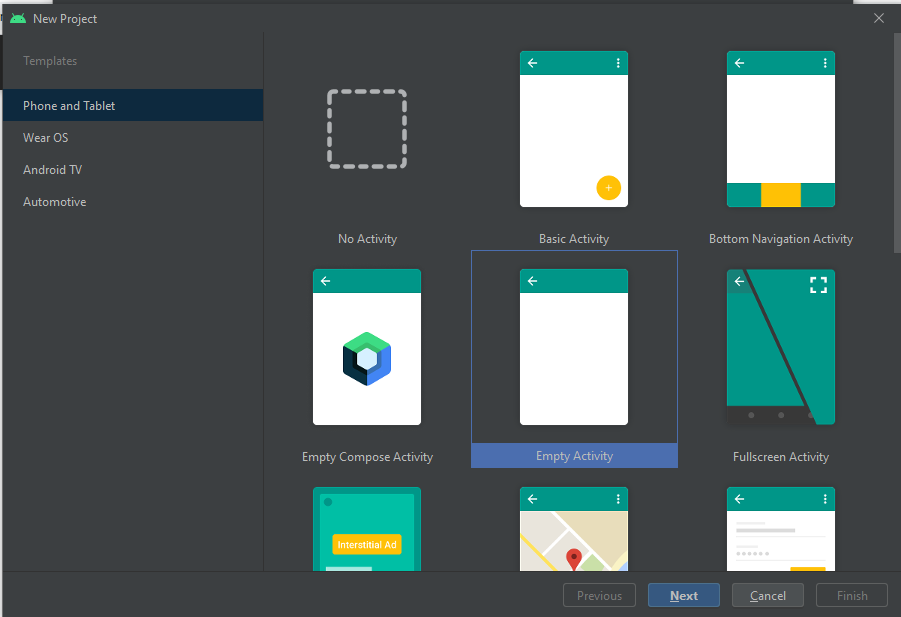

In the final tab, customize the Activity.

Now comes the coding part.

Start Code Integration

activity_main.xml

    <?xml version="1.0" encoding="utf-8"?>
    <RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
        xmlns:tools="http://schemas.android.com/tools"
        android:id="@+id/activity_main"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        tools:context="com.facetrackerdemo.MainActivity">

        <LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
            android:id="@+id/topLayout"
            android:orientation="vertical"
            android:layout_width="match_parent"
            android:layout_height="match_parent"
            android:keepScreenOn="true">

            <com.facetrackerdemo.CameraSurfacePreview
                android:id="@+id/preview"
                android:layout_width="match_parent"
                android:layout_height="match_parent">

                <com.facetrackerdemo.CameraOverlay
                    android:id="@+id/faceOverlay"
                    android:layout_width="match_parent"
                    android:layout_height="match_parent"/>

            </com.facetrackerdemo.CameraSurfacePreview>

        </LinearLayout>

    </RelativeLayout>

MainActivity.java

    package com.facetrackerdemo;

    import android.Manifest;
    import android.app.Activity;
    import android.app.AlertDialog;
    import android.app.Dialog;
    import android.content.Context;
    import android.content.DialogInterface;
    import android.content.pm.PackageManager;
    import android.os.Bundle;
    import android.support.design.widget.Snackbar;
    import android.support.v4.app.ActivityCompat;
    import android.support.v7.app.AppCompatActivity;
    import android.util.Log;
    import android.view.View;

    import com.google.android.gms.common.ConnectionResult;
    import com.google.android.gms.common.GoogleApiAvailability;
    import com.google.android.gms.vision.CameraSource;
    import com.google.android.gms.vision.MultiProcessor;
    import com.google.android.gms.vision.Tracker;
    import com.google.android.gms.vision.face.Face;
    import com.google.android.gms.vision.face.FaceDetector;

    import java.io.IOException;

    public class MainActivity extends AppCompatActivity {

        private static final String TAG = "FaceTrackerDemo";

        private static final int RC_HANDLE_GMS = 9001;
        private static final int RC_HANDLE_CAMERA_PERM = 2;

        private CameraSource mCameraSource = null;
        private CameraSurfacePreview mPreview;
        private CameraOverlay cameraOverlay;

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_main);

            mPreview = (CameraSurfacePreview) findViewById(R.id.preview);
            cameraOverlay = (CameraOverlay) findViewById(R.id.faceOverlay);

            // Create the camera source only once the camera permission is granted.
            int rc = ActivityCompat.checkSelfPermission(this, Manifest.permission.CAMERA);
            if (rc == PackageManager.PERMISSION_GRANTED) {
                createCameraSource();
            } else {
                requestCameraPermission();
            }
        }

        private void requestCameraPermission() {
            final String[] permissions = new String[]{Manifest.permission.CAMERA};

            // No rationale needed: ask for the permission right away.
            if (!ActivityCompat.shouldShowRequestPermissionRationale(this,
                    Manifest.permission.CAMERA)) {
                ActivityCompat.requestPermissions(this, permissions, RC_HANDLE_CAMERA_PERM);
                return;
            }

            final Activity thisActivity = this;

            View.OnClickListener listener = new View.OnClickListener() {
                @Override
                public void onClick(View view) {
                    ActivityCompat.requestPermissions(thisActivity, permissions,
                            RC_HANDLE_CAMERA_PERM);
                }
            };

            // Explain why the permission is needed, then ask again on "OK".
            Snackbar.make(cameraOverlay, "Camera permission is required",
                    Snackbar.LENGTH_INDEFINITE)
                    .setAction("OK", listener)
                    .show();
        }

        private void createCameraSource() {
            Context context = getApplicationContext();

            FaceDetector detector = new FaceDetector.Builder(context)
                    .setClassificationType(FaceDetector.ALL_CLASSIFICATIONS)
                    .build();

            // One GraphicFaceTracker is created per detected face.
            detector.setProcessor(
                    new MultiProcessor.Builder<>(new MainActivity.GraphicFaceTrackerFactory())
                            .build());

            if (!detector.isOperational()) {
                Log.e(TAG, "Face detector dependencies are not yet available.");
            }

            mCameraSource = new CameraSource.Builder(context, detector)
                    .setRequestedPreviewSize(640, 480)
                    .setFacing(CameraSource.CAMERA_FACING_FRONT)
                    .setRequestedFps(30.0f)
                    .build();
        }

        @Override
        protected void onResume() {
            super.onResume();
            startCameraSource();
        }

        @Override
        protected void onPause() {
            super.onPause();
            mPreview.stop();
        }

        @Override
        protected void onDestroy() {
            super.onDestroy();
            if (mCameraSource != null) {
                mCameraSource.release();
            }
        }

        @Override
        public void onRequestPermissionsResult(int requestCode, String[] permissions, int[] grantResults) {
            if (requestCode != RC_HANDLE_CAMERA_PERM) {
                Log.d(TAG, "Got unexpected permission result: " + requestCode);
                super.onRequestPermissionsResult(requestCode, permissions, grantResults);
                return;
            }

            if (grantResults.length != 0 && grantResults[0] == PackageManager.PERMISSION_GRANTED) {
                Log.d(TAG, "Camera permission granted - initialize the camera source");
                createCameraSource();
                return;
            }

            Log.e(TAG, "Permission not granted: results len = " + grantResults.length +
                    " Result code = " + (grantResults.length > 0 ? grantResults[0] : "(empty)"));

            // Without the camera the app cannot do anything useful, so close it.
            DialogInterface.OnClickListener listener = new DialogInterface.OnClickListener() {
                public void onClick(DialogInterface dialog, int id) {
                    finish();
                }
            };

            AlertDialog.Builder builder = new AlertDialog.Builder(this);
            builder.setTitle("FaceTrackerDemo")
                    .setMessage("Need Camera access permission!")
                    .setPositiveButton("OK", listener)
                    .show();
        }

        private void startCameraSource() {
            // Check that the device has Google Play services available.
            int code = GoogleApiAvailability.getInstance().isGooglePlayServicesAvailable(
                    getApplicationContext());
            if (code != ConnectionResult.SUCCESS) {
                Dialog dlg =
                        GoogleApiAvailability.getInstance().getErrorDialog(this, code, RC_HANDLE_GMS);
                dlg.show();
            }

            if (mCameraSource != null) {
                try {
                    mPreview.start(mCameraSource, cameraOverlay);
                } catch (IOException e) {
                    Log.e(TAG, "Unable to start camera source.", e);
                    mCameraSource.release();
                    mCameraSource = null;
                }
            }
        }

        private class GraphicFaceTrackerFactory implements MultiProcessor.Factory<Face> {
            @Override
            public Tracker<Face> create(Face face) {
                return new MainActivity.GraphicFaceTracker(cameraOverlay);
            }
        }

        private class GraphicFaceTracker extends Tracker<Face> {
            private CameraOverlay mOverlay;
            private FaceOverlayGraphics faceOverlayGraphics;

            GraphicFaceTracker(CameraOverlay overlay) {
                mOverlay = overlay;
                faceOverlayGraphics = new FaceOverlayGraphics(overlay);
            }

            @Override
            public void onNewItem(int faceId, Face item) {
                faceOverlayGraphics.setId(faceId);
            }

            @Override
            public void onUpdate(FaceDetector.Detections<Face> detectionResults, Face face) {
                mOverlay.add(faceOverlayGraphics);
                faceOverlayGraphics.updateFace(face);
            }

            @Override
            public void onMissing(FaceDetector.Detections<Face> detectionResults) {
                mOverlay.remove(faceOverlayGraphics);
            }

            @Override
            public void onDone() {
                mOverlay.remove(faceOverlayGraphics);
            }
        }
    }

CameraOverlay.java

    package com.facetrackerdemo;

    import android.content.Context;
    import android.graphics.Canvas;
    import android.util.AttributeSet;
    import android.view.View;

    import com.google.android.gms.vision.CameraSource;

    import java.util.HashSet;
    import java.util.Set;

    public class CameraOverlay extends View {
        private final Object mLock = new Object();
        private int mPreviewWidth;
        private float mWidthScaleFactor = 1.0f;
        private int mPreviewHeight;
        private float mHeightScaleFactor = 1.0f;
        private int mFacing = CameraSource.CAMERA_FACING_FRONT;
        private Set<OverlayGraphic> mOverlayGraphics = new HashSet<>();

        public static abstract class OverlayGraphic {
            private CameraOverlay mOverlay;

            public OverlayGraphic(CameraOverlay overlay) {
                mOverlay = overlay;
            }

            public abstract void draw(Canvas canvas);

            // Scales a preview-space length to view space.
            public float scaleX(float horizontal) {
                return horizontal * mOverlay.mWidthScaleFactor;
            }

            public float scaleY(float vertical) {
                return vertical * mOverlay.mHeightScaleFactor;
            }

            // The front camera preview is mirrored, so flip the x coordinate.
            public float translateX(float x) {
                if (mOverlay.mFacing == CameraSource.CAMERA_FACING_FRONT) {
                    return mOverlay.getWidth() - scaleX(x);
                } else {
                    return scaleX(x);
                }
            }

            public float translateY(float y) {
                return scaleY(y);
            }

            public void postInvalidate() {
                mOverlay.postInvalidate();
            }
        }

        public CameraOverlay(Context context, AttributeSet attrs) {
            super(context, attrs);
        }

        public void clear() {
            synchronized (mLock) {
                mOverlayGraphics.clear();
            }
            postInvalidate();
        }

        public void add(OverlayGraphic overlayGraphic) {
            synchronized (mLock) {
                mOverlayGraphics.add(overlayGraphic);
            }
            postInvalidate();
        }

        public void remove(OverlayGraphic overlayGraphic) {
            synchronized (mLock) {
                mOverlayGraphics.remove(overlayGraphic);
            }
            postInvalidate();
        }

        public void setCameraInfo(int previewWidth, int previewHeight, int facing) {
            synchronized (mLock) {
                mPreviewWidth = previewWidth;
                mPreviewHeight = previewHeight;
                mFacing = facing;
            }
            postInvalidate();
        }

        @Override
        protected void onDraw(Canvas canvas) {
            super.onDraw(canvas);

            synchronized (mLock) {
                if ((mPreviewWidth != 0) && (mPreviewHeight != 0)) {
                    mWidthScaleFactor = (float) canvas.getWidth() / (float) mPreviewWidth;
                    mHeightScaleFactor = (float) canvas.getHeight() / (float) mPreviewHeight;
                }

                for (OverlayGraphic overlayGraphic : mOverlayGraphics) {
                    overlayGraphic.draw(canvas);
                }
            }
        }
    }
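
The coordinate mapping that CameraOverlay performs can be tried out without a device. The following is a minimal sketch in plain Java, assuming the same arithmetic as scaleX, scaleY, translateX and translateY above; the class name OverlayMapping and its constructor parameters are hypothetical and are not part of the tutorial's code.

```java
// Hypothetical helper (not part of the tutorial's classes): reproduces the
// scale-and-mirror arithmetic of CameraOverlay without any Android types.
final class OverlayMapping {
    private final int viewWidth;
    private final float widthScale;
    private final float heightScale;
    private final boolean frontFacing;

    OverlayMapping(int viewWidth, int viewHeight,
                   int previewWidth, int previewHeight, boolean frontFacing) {
        this.viewWidth = viewWidth;
        // Same ratios that onDraw() computes from the canvas and preview sizes.
        this.widthScale = (float) viewWidth / (float) previewWidth;
        this.heightScale = (float) viewHeight / (float) previewHeight;
        this.frontFacing = frontFacing;
    }

    // Maps a preview x coordinate to view space, mirroring for the front camera.
    float translateX(float x) {
        float scaled = x * widthScale;
        return frontFacing ? viewWidth - scaled : scaled;
    }

    // Maps a preview y coordinate to view space (never mirrored).
    float translateY(float y) {
        return y * heightScale;
    }

    public static void main(String[] args) {
        OverlayMapping front = new OverlayMapping(960, 1280, 480, 640, true);
        System.out.println("x = " + front.translateX(100f));
        System.out.println("y = " + front.translateY(100f));
    }
}
```

With a front-facing camera, a face near the left edge of the preview frame ends up near the right edge of the view, which matches the mirrored image the user sees on screen.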

CameraSurfacePreview.java

    package com.facetrackerdemo;

    import android.content.Context;
    import android.content.res.Configuration;
    import android.util.AttributeSet;
    import android.util.Log;
    import android.view.SurfaceHolder;
    import android.view.SurfaceView;
    import android.view.ViewGroup;

    import com.google.android.gms.common.images.Size;
    import com.google.android.gms.vision.CameraSource;

    import java.io.IOException;

    public class CameraSurfacePreview extends ViewGroup {
        private static final String TAG = "SPACE-CAMERA";

        private Context mContext;
        private SurfaceView mSurfaceView;
        private boolean mStartRequested;
        private boolean mSurfaceAvailable;
        private CameraSource mCameraSource;
        private CameraOverlay mOverlay;

        public CameraSurfacePreview(Context context, AttributeSet attrs) {
            super(context, attrs);
            mContext = context;
            mStartRequested = false;
            mSurfaceAvailable = false;

            mSurfaceView = new SurfaceView(context);
            mSurfaceView.getHolder().addCallback(new SurfaceCallback());
            addView(mSurfaceView);
        }

        public void start(CameraSource cameraSource) throws IOException {
            if (cameraSource == null) {
                stop();
            }

            mCameraSource = cameraSource;

            if (mCameraSource != null) {
                mStartRequested = true;
                startIfReady();
            }
        }

        public void start(CameraSource cameraSource, CameraOverlay overlay) throws IOException {
            mOverlay = overlay;
            start(cameraSource);
        }

        public void stop() {
            if (mCameraSource != null) {
                mCameraSource.stop();
            }
        }

        public void release() {
            if (mCameraSource != null) {
                mCameraSource.release();
                mCameraSource = null;
            }
        }

        // Starts the camera only when a start was requested and the surface exists.
        private void startIfReady() throws IOException {
            if (mStartRequested && mSurfaceAvailable) {
                mCameraSource.start(mSurfaceView.getHolder());
                if (mOverlay != null) {
                    Size size = mCameraSource.getPreviewSize();
                    int min = Math.min(size.getWidth(), size.getHeight());
                    int max = Math.max(size.getWidth(), size.getHeight());
                    if (isPortraitMode()) {
                        // Swap width and height sizes when in portrait, since it will be rotated by
                        // 90 degrees
                        mOverlay.setCameraInfo(min, max, mCameraSource.getCameraFacing());
                    } else {
                        mOverlay.setCameraInfo(max, min, mCameraSource.getCameraFacing());
                    }
                    mOverlay.clear();
                }
                mStartRequested = false;
            }
        }

        private class SurfaceCallback implements SurfaceHolder.Callback {
            @Override
            public void surfaceCreated(SurfaceHolder surface) {
                mSurfaceAvailable = true;
                try {
                    startIfReady();
                } catch (IOException e) {
                    Log.e(TAG, "Could not start camera source.", e);
                }
            }

            @Override
            public void surfaceDestroyed(SurfaceHolder surface) {
                mSurfaceAvailable = false;
            }

            @Override
            public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
            }
        }

        @Override
        protected void onLayout(boolean changed, int left, int top, int right, int bottom) {
            // Fallback size used until the camera reports its preview size.
            int width = 320;
            int height = 240;
            if (mCameraSource != null) {
                Size size = mCameraSource.getPreviewSize();
                if (size != null) {
                    width = size.getWidth();
                    height = size.getHeight();
                }
            }

            if (isPortraitMode()) {
                int tmp = width;
                width = height;
                height = tmp;
            }

            final int layoutWidth = right - left;
            final int layoutHeight = bottom - top;

            // Fit the preview to the layout width, or to its height if that is too tall.
            int childWidth = layoutWidth;
            int childHeight = (int) (((float) layoutWidth / (float) width) * height);

            if (childHeight > layoutHeight) {
                childHeight = layoutHeight;
                childWidth = (int) (((float) layoutHeight / (float) height) * width);
            }

            for (int i = 0; i < getChildCount(); ++i) {
                getChildAt(i).layout(0, 0, childWidth, childHeight);
            }

            try {
                startIfReady();
            } catch (IOException e) {
                Log.e(TAG, "Could not start camera source.", e);
            }
        }

        private boolean isPortraitMode() {
            int orientation = mContext.getResources().getConfiguration().orientation;
            if (orientation == Configuration.ORIENTATION_LANDSCAPE) {
                return false;
            }
            if (orientation == Configuration.ORIENTATION_PORTRAIT) {
                return true;
            }

            Log.d(TAG, "isPortraitMode returning false by default");
            return false;
        }
    }
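
The sizing logic in onLayout is easier to follow in isolation. Here is a rough sketch in plain Java, assuming the same fit-to-width-then-clamp-to-height rule used above; PreviewFit and its fit method are hypothetical names introduced only for illustration.

```java
// Hypothetical helper (not part of the tutorial's classes): computes the child
// size the same way CameraSurfacePreview.onLayout() does, without Android types.
final class PreviewFit {
    private PreviewFit() {
    }

    // Returns {childWidth, childHeight} for the given layout and preview sizes.
    static int[] fit(int layoutWidth, int layoutHeight,
                     int previewWidth, int previewHeight, boolean portrait) {
        int width = previewWidth;
        int height = previewHeight;
        if (portrait) {
            // The preview is rotated by 90 degrees in portrait, so swap the sides.
            int tmp = width;
            width = height;
            height = tmp;
        }

        // First try to fill the layout width while keeping the aspect ratio.
        int childWidth = layoutWidth;
        int childHeight = (int) (((float) layoutWidth / (float) width) * height);

        // If that is taller than the layout, fill the height instead.
        if (childHeight > layoutHeight) {
            childHeight = layoutHeight;
            childWidth = (int) (((float) layoutHeight / (float) height) * width);
        }
        return new int[] {childWidth, childHeight};
    }

    public static void main(String[] args) {
        int[] size = fit(1080, 1920, 640, 480, true);
        System.out.println(size[0] + " x " + size[1]);
    }
}
```

Because the child is laid out from the top-left corner, any leftover space appears on the right or at the bottom of the preview rather than being split evenly.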

FaceOverlayGraphics.java

    package com.facetrackerdemo;

    import android.graphics.Canvas;
    import android.graphics.Color;
    import android.graphics.Paint;

    import com.google.android.gms.vision.face.Face;

    class FaceOverlayGraphics extends CameraOverlay.OverlayGraphic {
        private static final float FACE_POSITION_RADIUS = 10.0f;
        private static final float ID_TEXT_SIZE = 40.0f;
        private static final float ID_Y_OFFSET = 50.0f;
        private static final float ID_X_OFFSET = -50.0f;
        private static final float BOX_STROKE_WIDTH = 5.0f;

        private static final int[] COLOR_CHOICES = {
                Color.BLUE,
                Color.CYAN,
                Color.GREEN,
                Color.MAGENTA,
                Color.RED,
                Color.WHITE,
                Color.YELLOW
        };
        private static int mCurrentColorIndex = 0;

        private Paint mFacePositionPaint;
        private Paint mIdPaint;
        private Paint mBoxPaint;

        private volatile Face mFace;
        private int mFaceId;
        private float mFaceHappiness;

        FaceOverlayGraphics(CameraOverlay overlay) {
            super(overlay);

            // Each new face graphic takes the next color in the list.
            mCurrentColorIndex = (mCurrentColorIndex + 1) % COLOR_CHOICES.length;
            final int selectedColor = COLOR_CHOICES[mCurrentColorIndex];

            mFacePositionPaint = new Paint();
            mFacePositionPaint.setColor(selectedColor);

            mIdPaint = new Paint();
            mIdPaint.setColor(selectedColor);
            mIdPaint.setTextSize(ID_TEXT_SIZE);

            mBoxPaint = new Paint();
            mBoxPaint.setColor(selectedColor);
            mBoxPaint.setStyle(Paint.Style.STROKE);
            mBoxPaint.setStrokeWidth(BOX_STROKE_WIDTH);
        }

        void setId(int id) {
            mFaceId = id;
        }

        void updateFace(Face face) {
            mFace = face;
            postInvalidate();
        }

        @Override
        public void draw(Canvas canvas) {
            Face face = mFace;
            if (face == null) {
                return;
            }

            // Draws a circle at the position of the detected face, with the face's track id below.
            float x = translateX(face.getPosition().x + face.getWidth() / 2);
            float y = translateY(face.getPosition().y + face.getHeight() / 2);
            canvas.drawCircle(x, y, FACE_POSITION_RADIUS, mFacePositionPaint);
            canvas.drawText("id: " + mFaceId, x + ID_X_OFFSET, y + ID_Y_OFFSET, mIdPaint);
            canvas.drawText("happiness: " + String.format("%.2f", face.getIsSmilingProbability()),
                    x - ID_X_OFFSET, y - ID_Y_OFFSET, mIdPaint);
            canvas.drawText("right eye: " + String.format("%.2f", face.getIsRightEyeOpenProbability()),
                    x + ID_X_OFFSET * 2, y + ID_Y_OFFSET * 2, mIdPaint);
            canvas.drawText("left eye: " + String.format("%.2f", face.getIsLeftEyeOpenProbability()),
                    x - ID_X_OFFSET * 2, y - ID_Y_OFFSET * 2, mIdPaint);

            // Draws a bounding box around the face.
            float xOffset = scaleX(face.getWidth() / 2.0f);
            float yOffset = scaleY(face.getHeight() / 2.0f);
            float left = x - xOffset;
            float top = y - yOffset;
            float right = x + xOffset;
            float bottom = y + yOffset;
            canvas.drawRect(left, top, right, bottom, mBoxPaint);
        }
    }
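
One detail worth handling when drawing the labels: the classification getters return a negative sentinel (Face.UNCOMPUTED_PROBABILITY, which is -1.0f) when a probability could not be computed, and String.format without a Locale may print a decimal comma on some devices. The sketch below shows one possible way to format the labels; ProbabilityLabel and its format method are hypothetical and not part of the tutorial's code, and the "n/a" text is just an illustrative choice.

```java
import java.util.Locale;

// Hypothetical helper (not part of the tutorial's classes): builds the overlay
// label text for a classification probability.
final class ProbabilityLabel {
    // Assumption: mirrors Face.UNCOMPUTED_PROBABILITY from Mobile Vision.
    static final float UNCOMPUTED = -1.0f;

    private ProbabilityLabel() {
    }

    static String format(String name, float probability) {
        if (probability < 0f) {
            // The detector could not compute this value for the current frame.
            return name + ": n/a";
        }
        // A fixed locale keeps the decimal point stable across devices.
        return name + ": " + String.format(Locale.US, "%.2f", probability);
    }

    public static void main(String[] args) {
        System.out.println(format("happiness", 0.5f));
        System.out.println(format("left eye", UNCOMPUTED));
    }
}
```

In FaceOverlayGraphics, a helper like this could replace the inline String.format calls passed to canvas.drawText.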

And done!

Face recognition builds on face detection, so any face that appears in a video can also be tracked. Adding this feature can improve exposure and remove the need for a manual face lock. Therefore, if you’re developing an Android app that relies heavily on the camera, it’s important to hire an Android app developer to implement this feature in your app.

Grab a free copy of Android Face Recognition Example from GitHub.