FaceApp's viral success has sparked a rush to build FaceApp alternatives. With guidance from one of our expert photo editing app developers, we have rounded up the 7 best FaceApp alternatives of 2024. If you want to know the app's closest competitors and their features before developing a FaceApp-like app of your own, this blog gives you a detailed look at the photo app genre.

Space-O Technologies has been developing mobile applications since 2010, which has given us experience and an eye for upcoming trends in the app world. Just as apps like Uber inspired an evergreen segment of similar apps, FaceApp has inspired an evergreen segment of alternatives.

Thanks to augmented reality (AR), artificial intelligence, and neural networks, these apps are reaching new technological heights. Not long ago, few people imagined making such hilarious photo edits with a single tap on a 5-inch screen.

What is FaceApp?

FaceApp is a photo editing app available on both major mobile platforms, iOS and Android. You can also use it on a PC by running the Android version in an Android emulator.

FaceApp is developed by Wireless Lab, a company based in Russia. The app is known for producing highly realistic transformations of human faces in photographs using AI-based neural networks, and it has become one of the most popular photo apps.

Want to Make an App like FaceApp?

Want to validate your app idea? Want to get a free consultation from an expert?

If you are planning to enter photo editing app development, study the best FaceApp alternatives to understand what they offer and who your biggest competitors in the market are.

7 Best FaceApp Alternatives to Look at Before Developing a Photo Editing App

Here is a list of seven FaceApp alternatives.

| App | Rating (Apple App Store) | Number of reviews (Apple App Store) |
|---|---|---|
| Reface | 4.8 | 420.3K |
| Meitu | 4.8 | 59.7K |
| Oldify | 4.3 | 1.3K |
| AgingBooth | 4.3 | 8.3K |
| InkHunter | 4.8 | 19.4K |
| Cupace | 4.7 | 126K |
| Face Swap Live | 4.5 | 1.6K |

Reface

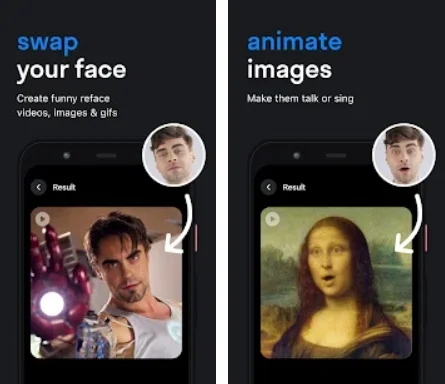

Reface is used by over 10 million users worldwide and is one of the most popular face apps. With this free FaceApp alternative, you can swap your face with a celebrity or pop star in seconds; in short, it puts your face onto another picture. Its other features include live face swaps and gender swaps. Reface turns your selfies into realistic face-swap videos and GIFs, a feature set worth studying if you want to build an app like Reface.

Image Credit: Reface

Features of the Reface App

- Swap your face with celebrities or movie characters

- Replace faces in memes using the face editor

- New videos and GIFs for face swapping are posted every day

- Share face-swapped clips or funny memes as GIFs or videos on social media platforms

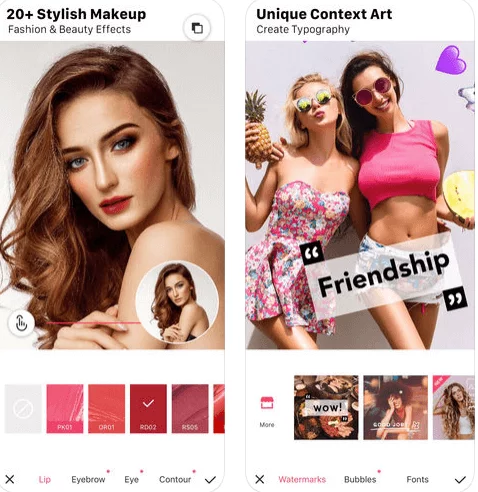

Meitu

Meitu is a free, China-based app like FaceApp with over 1 million subscribers. This free face editing app is considered one of the best web and PC alternatives to FaceApp. It is a face filter app that combines image editing with a polished UI/UX design and brilliant filters that instantly beautify a photo, much like FaceApp's Hollywood filter. Users can also reshape body features and use Snapchat-like effects such as eye enlargement, which can make a photo look as though it stepped out of an anime.

Image Credit: Meitu

Features of Meitu, the Face Filter App

- AI technology detects facial features and adds cute motion stickers or hand-drawn effects to the face when you take a selfie in the app.

- The app includes a cloud service with smart customer support, and it collects and stores large amounts of data in real time.

- The Meitu app uses patented M-Face recognition technology, which learns every detail of the face for precise retouching.

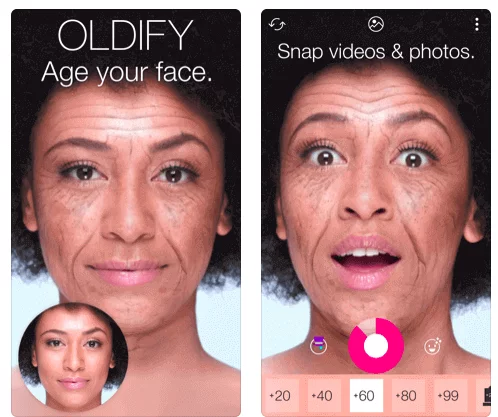

Oldify

If you are searching for an alternative to FaceApp, try Oldify. Oldify is one of a series of apps by Apptly, a company that develops AR-based photo and video editing apps: Oldify makes a face look older, Stachify adds a mustache to a face, Vampify transforms a face to look like a vampire, and so on.

Oldify is arguably the best old-age face effects app for making you look old. For aging effects specifically, it can even outdo FaceApp, since it offers many effects, an easy-to-use interface, and a wider range of aging looks to work with. The app also has amazing animations and lets users record videos of their aged face making coughing and yawning noises like an old person.

Image Credit: Oldify

Features of the Oldify App

- The app transforms a user's appearance in real time with live video filters such as zombie, astronaut, or panda.

- Using augmented reality, it can also apply subtler effects, such as adding a beard or a tiger mask to a face.

- The app's algorithms are highly optimized for strong performance on both modern and legacy devices.

AgingBooth

What will you look like when you are old? AgingBooth, one of the best free apps similar to FaceApp, answers that question. The app was first released in 2010. At the time of writing, it ranks 2nd in the App Store and sits in the top 50 on the Google Play Store. According to Sensor Tower data, it has been downloaded over 2.2 million times on iOS alone in the last few weeks. AgingBooth instantly ages face photos, so if you are looking for FaceApp alternatives, it is well worth trying.

Image Credit: AgingBooth

Features of the AgingBooth App

- The app works offline and transforms faces instantly.

- Photos can be shared on Facebook and Twitter or sent by email or MMS.

- It auto-crops the photo using face detection and lets you choose one face when a photo contains several.
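AgingBooth's own code is not public, but the auto-crop idea is easy to illustrate. The sketch below assumes a face detector (for example, the one built into the phone's OS) has already returned bounding boxes; it picks either the face the user chose or the largest one, then pads that box and clamps it to the image edges. The names `Box`, `pick_face`, and `auto_crop`, and the 50% `margin`, are hypothetical choices for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical helpers illustrating face-detection auto-crop; not AgingBooth's code.


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in pixels; (x, y) is the top-left corner."""
    x: int
    y: int
    w: int
    h: int


def pick_face(faces: List[Box], index: Optional[int] = None) -> Box:
    """Return the face the user selected (by index), or the largest face by default."""
    if not faces:
        raise ValueError("no faces detected")
    if index is not None:
        return faces[index]
    return max(faces, key=lambda b: b.w * b.h)


def auto_crop(img_w: int, img_h: int, face: Box, margin: float = 0.5) -> Box:
    """Grow the face box by `margin` times its size on each side, clamped to the image."""
    pad_x = int(face.w * margin)
    pad_y = int(face.h * margin)
    left = max(0, face.x - pad_x)
    top = max(0, face.y - pad_y)
    right = min(img_w, face.x + face.w + pad_x)
    bottom = min(img_h, face.y + face.h + pad_y)
    return Box(left, top, right - left, bottom - top)


# Usage: two faces in a 640x480 photo, boxes assumed to come from a detector.
faces = [Box(50, 40, 100, 120), Box(300, 60, 60, 70)]
crop = auto_crop(640, 480, pick_face(faces))  # Box(x=0, y=0, w=200, h=220)
```

Padding around the detected box keeps hair and chin in frame, which aging effects usually need; the exact margin is a tuning choice.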

InkHunter

If you are unsure how a tattoo will look on you, InkHunter is designed for you. The idea for InkHunter originated during a 48-hour hackathon in July 2014, among a group of software engineers based in Ukraine. The app lets users preview a tattoo in AR and take a snapshot of it before it is inked forever. One of the best FaceApp alternatives, it has a diverse library of sketches, and users can also upload their own designs to see how the actual tattoo will look.

Image Credit: InkHunter

Features of the InkHunter App

- The app can portray a tattoo on any part of the body from different angles.

- An advanced photo editor makes the tattoos look realistic.

- Other features include an adaptive custom sketch uploader, the ability to remove photos and sketches from the gallery, and navigation and search for the tattoo gallery.

Cupace

Cupace is all about cutting faces out of photos and pasting them into others. Users can create funny photos and memes, swap faces, and decorate the result with built-in stickers, emojis, and text. Beyond face swapping, Cupace lets users manually cut a face out of any image and paste it anywhere, even onto an inanimate object.

Image Credit: Cupace

Features of the Cupace App

- Cutting and pasting a face is simple, and users can zoom into the image to make the face cutout as accurate as possible.

- A cut-out face can be pasted multiple times and reused on multiple photos.
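Cupace's actual implementation is not public; the sketch below only illustrates the cut-and-paste idea described above. It assumes the outline the user traces has already been turned into a boolean mask the same size as the source photo, and it represents images as plain lists of RGB tuples. The helper names `cut_out` and `paste` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical helpers illustrating mask-based cut and paste; not Cupace's code.
Pixel = Tuple[int, int, int]            # (R, G, B), each 0-255
Image = List[List[Pixel]]               # image[y][x]
Mask = List[List[bool]]                 # True where a pixel belongs to the traced face
Cutout = List[Tuple[int, int, Pixel]]   # (x, y, pixel) in source-image coordinates


def cut_out(source: Image, mask: Mask) -> Cutout:
    """Keep only the source pixels the user traced (mask is True)."""
    return [
        (x, y, source[y][x])
        for y, row in enumerate(mask)
        for x, keep in enumerate(row)
        if keep
    ]


def paste(target: Image, cutout: Cutout, dx: int, dy: int) -> Image:
    """Return a copy of `target` with the cutout shifted by (dx, dy).

    The original target is left untouched, so one cutout can be pasted
    repeatedly or onto different photos. Pixels falling outside are skipped.
    """
    result = [row[:] for row in target]
    height = len(result)
    width = len(result[0]) if height else 0
    for x, y, pixel in cutout:
        tx, ty = x + dx, y + dy
        if 0 <= tx < width and 0 <= ty < height:
            result[ty][tx] = pixel
    return result
```

Because the cutout is stored once and `paste` never mutates its input, the same face can be stamped several times or onto other photos, as the feature list describes. A production app would also feather the mask edges and blend colors rather than copy pixels outright.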

Face Swap Live

Face Swap Live, from a New York-based developer, is one of the best FaceApp alternatives: it lets users swap faces with friends in real time. Users can also swap faces with celebrities and record videos or take photos. Because the app swaps faces live, right from the camera's video feed, there is no need for static photos. In 2016, it ranked as the 2nd best-selling paid app in the App Store.

Image Credit: Face Swap Live

Features of the Face Swap Live App

- The app has a costume mode where users can pick a hat, glasses, or a beard to make the photo look hilarious.

- The app offers 3D filters and photo effects that let users edit their photos like a professional.


Let's look at some commonly asked questions about developing FaceApp alternatives.

Frequently Asked Questions

Is it a good idea to invest in a FaceApp alternative app?

Yes. FaceApp alternatives are highly popular today, and by using technologies such as artificial intelligence, machine learning, and augmented reality, you can build your own FaceApp alternative and earn good revenue from it.

What features should a FaceApp alternative include?

Here's a list of common yet important features to consider when creating a FaceApp alternative:

- Live video filters, such as zombie or astronaut effects

- Advanced video and photo editing tools

- Offline support

- 3D filters and photos

- Social sharing support

How long does it take to develop a FaceApp alternative?

Developing an app like FaceApp typically takes around 4 to 6 months. The exact timeline depends on the features and functionality you want to include.

Have you developed any photo editing apps?

Yes, we have developed over 50 photo and video editing apps with unique features, which you can explore in our portfolio section. If you are looking to develop a FaceApp alternative for your business, feel free to reach out to us.

Want to Create a Photo Editing App?

The world of photo and video editing apps has been transformed since FaceApp arrived. Today's technologies and algorithms produce smooth, seamless transformations of a user's face. In such a technically demanding genre, you need an experienced enterprise mobile application development company like us at your disposal.

We have built over 4,400 mobile apps. Our dedicated team of app developers, with experience building over 50 video and photo apps, develops customized photo editor apps with features such as editing tools, filters, cartoon effects, and photo collages, along with tools to manage and share large numbers of photos. Our photo apps include TopIt and GalleryGuardian.

TopIt App

- Create private and public video and image-based competitions

- Create challenges against friends, rivals, and celebrities

- Over 5,000 downloads on the Google Play Store

Our team can guide you through FaceApp alternative app development and build an app that fits your needs. If you have questions about developing photo editing apps like VSCO or Snapseed, building a FaceApp alternative, the cost of developing an app like FaceApp, or hiring mobile app developers for the project, fill out our contact form to get in touch with our sales representatives. We handle UI/UX design, app development, quality assurance, and maintenance, and the consultation is free.